How Smallpox Was Wiped Off the Planet By a Vaccine and Global Cooperation

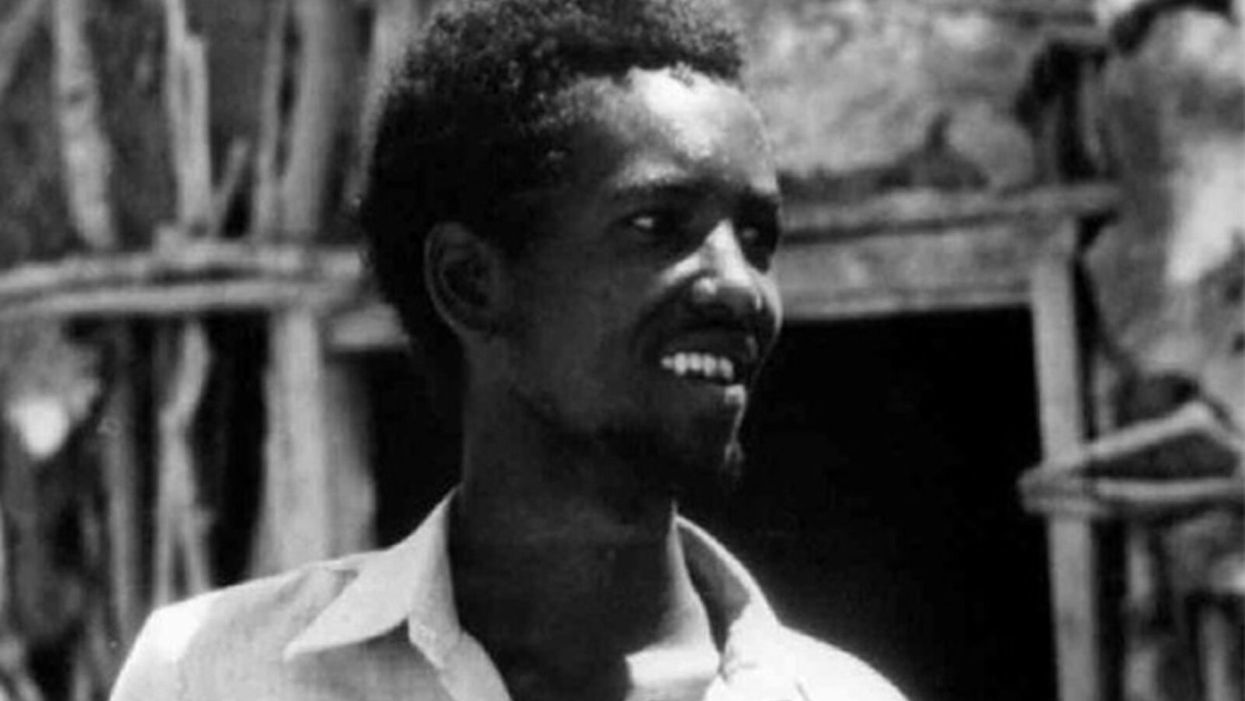

The world's last recorded case of endemic smallpox was in Ali Maow Maalin, of Merka, Somalia, in October 1977. He made a full recovery.

For 3,000 years, civilizations all over the world were brutalized by smallpox, a contagious and deadly viral disease characterized by fever and a rash of painful, oozing sores.

Smallpox was merciless, killing one third of the people it infected and leaving many survivors permanently pockmarked or blind. Although smallpox was more common during the 18th and 19th centuries, it remained a leading cause of death into the early 1950s, when an estimated 50 million cases occurred worldwide each year.

A Primitive Cure

Sometime during the 10th century, Chinese physicians figured out that exposing people to a tiny bit of smallpox would sometimes result in a milder infection and immunity to the disease afterward (if the person survived). Desperate for a cure, people would huff powders made of smallpox scabs or insert smallpox pus into their skin, all in the hopes of getting immunity without having to get too sick. However, this method – called inoculation – didn't always work. People could still catch the full-blown disease, spread it to others, or even catch another infectious disease like syphilis in the process.

A Breakthrough Treatment

For centuries, inoculation – however imperfect – was the only protection the world had against smallpox. But in the late 18th century, an English physician named Edward Jenner created a more effective method. Jenner discovered that inoculating a person with cowpox – a much milder relative of the smallpox virus – would make that person immune to smallpox as well, but this time without the possibility of actually catching or transmitting smallpox. His breakthrough became the world's first vaccine against a contagious disease. Other researchers, like Louis Pasteur, would use these same principles to make vaccines for global killers like anthrax and rabies. Vaccination was considered a miracle, conferring all of the rewards of having gotten sick (immunity) without the risk of death or blindness.

Scaling the Cure

As vaccination became more widespread, the number of global smallpox deaths began to drop, particularly in Europe and the United States. But even as late as 1967, smallpox was still infecting an estimated 10 to 15 million people a year in poorer parts of the globe. The World Health Assembly (a decision-making body of the World Health Organization) decided that year to launch the first coordinated effort to eradicate smallpox from the planet completely, aiming for 80 percent vaccine coverage in every country in which the disease was endemic – a total of 33 countries.

But officials knew that eradicating smallpox would be easier said than done. Doctors had to contend with wars, floods, and language barriers to make their campaign a success. The vaccination initiative in Bangladesh proved the most challenging, due to its population density and the prevalence of the disease, writes journalist Laurie Garrett in her book, The Coming Plague.

In one instance, French physician Daniel Tarantola, on assignment in Bangladesh, encountered a murderous gang that was thought to be spreading smallpox throughout the countryside during its crime sprees. Without police protection, Tarantola confronted the gang and "faced down guns" in order to immunize its members, protecting the villagers from repeated outbreaks.

Because there was not enough vaccine to immunize everyone in a given country, doctors used a strategy called "ring vaccination," which meant locating individual outbreaks and vaccinating all known and possible contacts to stop each outbreak at its source. Fewer than 50 percent of the population in Nigeria received a vaccine, for example, but thanks to ring vaccination, smallpox was eradicated in that country nonetheless. Doctors worked tirelessly for the next eleven years to immunize as many people as possible.
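The logic of ring vaccination can be illustrated with a small sketch. This is not the WHO's actual procedure, only a toy model under stated assumptions: the contact network, the placeholder names, and the choice of two "rings" (contacts, then contacts of contacts) are all hypothetical.

```python
from collections import deque


def ring_targets(contacts, cases, rings=2):
    """Return everyone within `rings` contact steps of a known case.

    `contacts` maps each person to the people they are in contact with.
    The default of two rings is an assumption made for illustration.
    """
    targets = set()
    seen = set(cases)
    queue = deque((case, 0) for case in cases)
    while queue:
        person, depth = queue.popleft()
        if depth == rings:
            continue  # do not look beyond the outermost ring
        for other in contacts.get(person, ()):
            if other not in seen:
                seen.add(other)
                targets.add(other)
                queue.append((other, depth + 1))
    return targets


# Hypothetical village contact network (all names are placeholders).
contacts = {
    "case": ["sibling", "neighbor"],
    "sibling": ["case", "classmate"],
    "neighbor": ["case", "trader"],
    "classmate": ["sibling", "teacher"],
    "trader": ["neighbor"],
    "teacher": ["classmate"],
    "farmer": ["herder"],
    "herder": ["farmer"],
}

targets = ring_targets(contacts, ["case"])
print(sorted(targets))  # ['classmate', 'neighbor', 'sibling', 'trader']
```

In this toy network only four of the seven uninfected people are vaccinated, yet every path leading away from the case runs through someone who is immune. That is the intuition behind how a country could eliminate the disease with well under full coverage.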

A Resounding Success

In November 1975, officials discovered a case of variola major, the more virulent strain of the smallpox virus, in a three-year-old Bangladeshi girl named Rahima Banu. Banu was forcibly quarantined in her family's home with armed guards until the risk of transmission had passed, while officials went door-to-door vaccinating everyone within a five-mile radius. Hers proved to be the last naturally occurring case of variola major. Two years later, the last naturally occurring case of smallpox in human history, caused by the milder variola minor strain, was reported in Somalia. When no new community-acquired cases appeared after that, the World Health Organization declared smallpox officially eradicated on May 8, 1980.

Because of smallpox, we now know it's possible to completely eradicate a disease. But is it likely to happen again with other diseases, like COVID-19? Some scientists aren't so sure. As dangerous as smallpox was, it had a few characteristics that may have made eradication easier than it would be for other diseases. Smallpox, for instance, has no animal reservoir, meaning that it could not circulate in animals and resurge in a human population at a later date. Additionally, a person who survived smallpox was generally immune to the disease for life, which is not the case for COVID-19.

In The Coming Plague, Japanese physician Isao Arita, who led the WHO's Smallpox Eradication Unit, admitted to routinely defying orders from the WHO, mobilizing to parts of the world without official approval and sometimes even vaccinating people against their will. "If we hadn't broken every single WHO rule many times over, we would have never defeated smallpox," Arita said. "Never."

Still, thanks to the life-saving technology of vaccines – and the tireless efforts of doctors and scientists across the globe – a once-lethal disease is now a thing of the past.

A woman receives a mammogram, which can detect the presence of tumors in a patient's breast.

When a patient is diagnosed with early-stage breast cancer, having surgery to remove the tumor is considered the standard of care. But what happens when a patient can’t have surgery?

Whether it’s due to high blood pressure, advanced age, heart issues, or other reasons, some breast cancer patients don’t qualify for a lumpectomy, one of the most common treatment options for early-stage breast cancer. A lumpectomy surgically removes the tumor while keeping the patient’s breast intact, whereas a mastectomy removes the entire breast and nearby lymph nodes.

Fortunately, a new technique called cryoablation is now available for breast cancer patients who either aren’t candidates for surgery or don’t feel comfortable undergoing a surgical procedure. With cryoablation, doctors use an ultrasound or CT scan to locate any tumors inside the patient’s breast. They then insert small, needle-like probes into the patient's breast which create an “ice ball” that surrounds the tumor and kills the cancer cells.

Cryoablation has been used for decades to treat cancers of the kidneys and liver—but only in the past few years have doctors been able to use the procedure to treat breast cancer patients. And while clinical trials have shown that cryoablation works for tumors smaller than 1.5 centimeters, a recent clinical trial at Memorial Sloan Kettering Cancer Center in New York has shown that it can work for larger tumors, too.

In this study, doctors performed cryoablation on patients whose tumors were, on average, 2.5 centimeters. The cryoablation procedure lasted for about 30 minutes, and patients were able to go home on the same day following treatment. Doctors then followed up with the patients after 16 months. In the follow-up, doctors found the recurrence rate for tumors after using cryoablation was only 10 percent.

For patients who don’t qualify for surgery, radiation and hormonal therapy are typically used to treat tumors. However, said Yolanda Brice, M.D., an interventional radiologist at Memorial Sloan Kettering Cancer Center, “when treated with only radiation and hormonal therapy, the tumors will eventually return.” Cryoablation, Brice said, could be a more effective way to treat cancer for patients who can’t have surgery.

“The fact that we only saw a 10 percent recurrence rate in our study is incredibly promising,” she said.

Urinary tract infections account for more than 8 million trips to the doctor each year.

Few things are more painful than a urinary tract infection (UTI). Common in men and women, these infections account for more than 8 million trips to the doctor each year and can cause an array of uncomfortable symptoms, from a burning feeling during urination to fever, vomiting, and chills. For an unlucky few, UTIs can be chronic—meaning that, despite treatment, they just keep coming back.

But new research, presented at the European Association of Urology (EAU) Congress in Paris this week, brings some hope to people who suffer from UTIs.

Clinicians from the Royal Berkshire Hospital presented the results of a nine-year clinical trial in which 89 men and women who suffered from recurrent UTIs were given an oral vaccine called MV140, designed to prevent the infections. Every day for three months, the participants were given two sprays of the vaccine (flavored to taste like pineapple) and then followed over the course of nine years. Clinicians analyzed medical records and asked the study participants about symptoms to check whether any had experienced UTIs or had any adverse reactions from taking the vaccine.

The results showed that across nine years, 48 of the participants (about 54%) remained completely infection-free. On average, the study participants remained infection-free for 54.7 months, or about four and a half years.
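The two headline figures follow directly from the numbers reported in the trial. A quick check, using only the values quoted above (the variable names are just for illustration):

```python
participants = 89      # people enrolled in the trial
infection_free = 48    # people with no UTI over the nine years
mean_months = 54.7     # average infection-free period, in months

share = infection_free / participants
mean_years = mean_months / 12

print(f"{share:.1%}")       # 53.9%
print(f"{mean_years:.2f}")  # 4.56
```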

“While we need to be pragmatic, this vaccine is a potential breakthrough in preventing UTIs and could offer a safe and effective alternative to conventional treatments,” said Gernot Bonita, Professor of Urology at the Alta Bro Medical Centre for Urology in Switzerland, who is also the EAU Chairman of Guidelines on Urological Infections.

The news comes as a relief not only for people who suffer chronic UTIs, but also for doctors who have seen an uptick in antibiotic-resistant UTIs in the past several years. Because UTIs usually require antibiotics, repeated treatment raises the risk that the bacteria become resistant to those drugs, making infections more difficult to treat. A preventative vaccine could mean fewer infections, fewer antibiotics, and less drug resistance overall.

“Many of our participants told us that having the vaccine restored their quality of life,” said Dr. Bob Yang, Consultant Urologist at the Royal Berkshire NHS Foundation Trust, who helped lead the research. “While we’re yet to look at the effect of this vaccine in different patient groups, this follow-up data suggests it could be a game-changer for UTI prevention if it’s offered widely, reducing the need for antibiotic treatments.”